Adding README

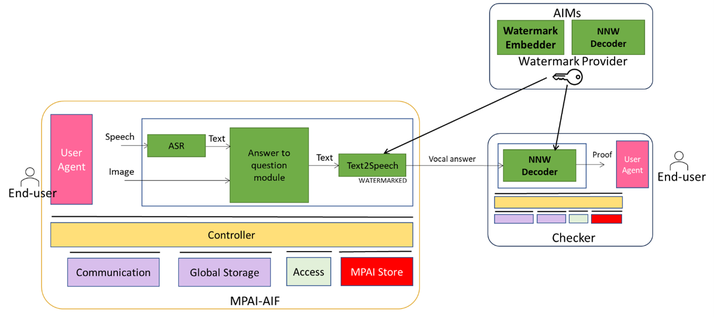

Adding AIW multimodal question answering watermarking model

Update of the controller.py

README.md (new file, mode 100644)

controller_NNW.py (new file, mode 100644)

resources/MPAI_NNW-MQA.png (new file, mode 100644, 58.3 KB)

resources/MPAIui.py (new file, mode 100644, 1.98 MB)

10 files moved