Initial commit

README.md

0 → 100644

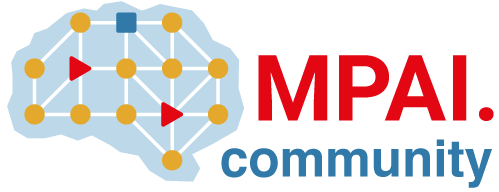

_pics/additional_drivers.png

0 → 100644

107 KB

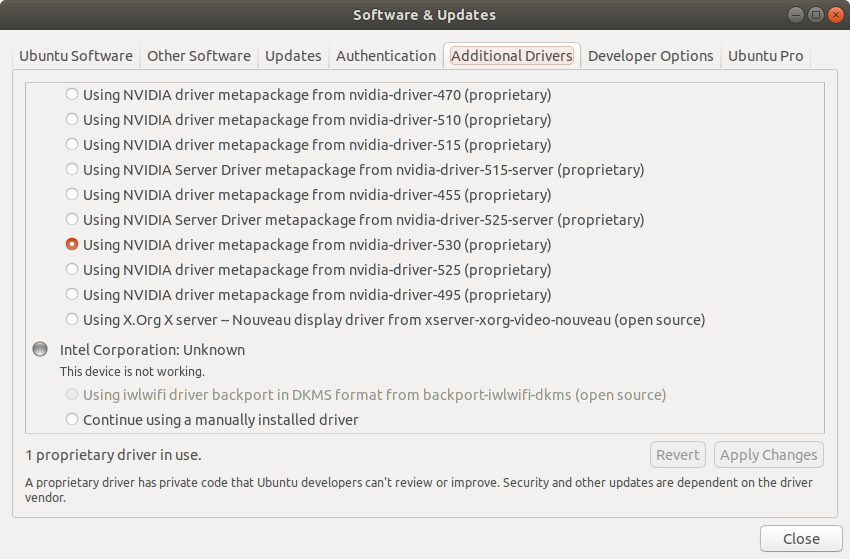

_pics/ctrler_payload.png

0 → 100644

46.6 KB

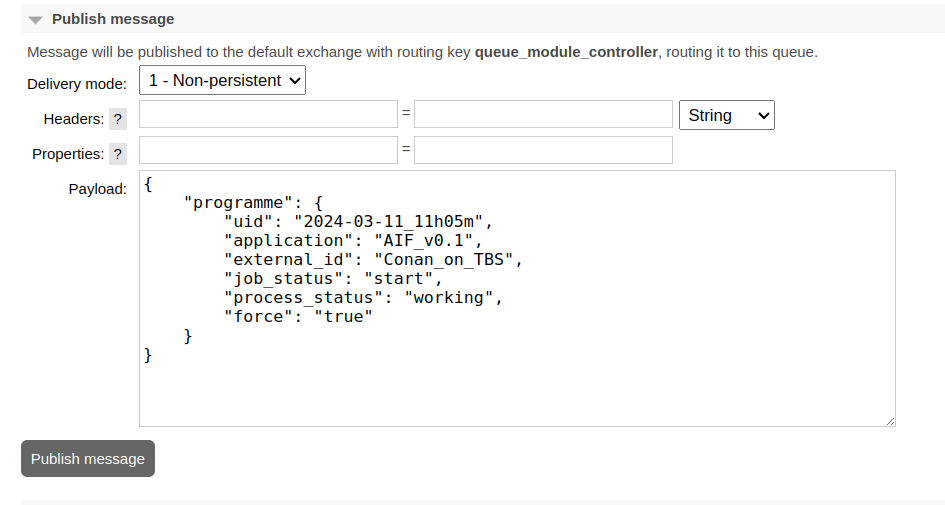

_pics/entities.jpg

0 → 100644

92.3 KB

_pics/workflow.jpg

0 → 100644

71 KB

compose.yml

0 → 100644

controller/Dockerfile

0 → 100644

controller/requirements.txt

0 → 100644

controller/src/main.py

0 → 100644

controller/src/run_funs.py

0 → 100644

examples/README.md

0 → 100644

examples/rabbit_in_1.json

0 → 100644

examples/rabbit_in_2.json

0 → 100644